Shift Left: Headless Data Architecture, Part 2.

This article is reproduced in its entirety with permission from Confluent, of which Sida4 / 4impact is a proud APAC Integration Partner.

Original author Adam Bellemare.

Headless data architecture was created via a shift-left approach

The headless data architecture is the formalisation of a data access layer at the center of your organisation. Encompassing both streams and tables, it provides consistent data access for both operational and analytical use cases. Streams provide low-latency capabilities to enable timely reactions to events, while tables provide higher-latency but extremely batch-efficient querying capabilities. You simply choose the most relevant processing head for your requirements and plug it into the data.

Building a headless data architecture requires us to identify the work we’re already doing deep inside our data analytics plane, and shift it to the left. We take work that you’re already doing downstream, such as data cleanup, structuring, and schematisation, and push it upstream into the source system. The data consumers can rely on a single standardised set of data, provided through both streams and tables, to power their operations, analytics, and everything in between.

A headless data architecture significantly reduces downstream costs by shifting the work to the left. It provides a simpler and more cost-effective way to create, access, and use data, particularly in comparison to the traditional multi-hop approach.

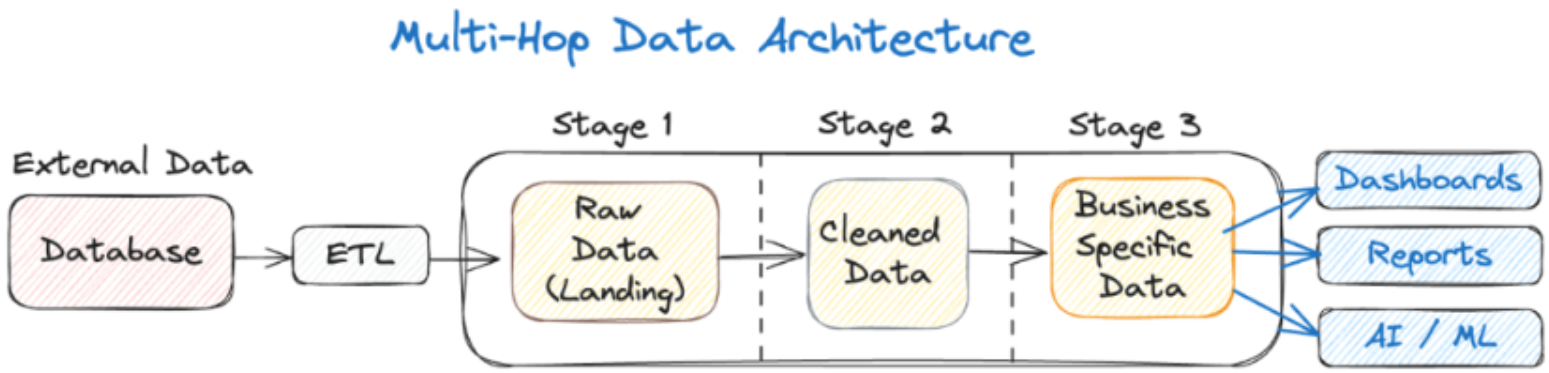

The multi-hop and medallion data architectures

If you’re like the vast majority of organisations, you already have some established extract, transform, load (ETL) data pipelines, a data lake, a data warehouse, and/or a data lakehouse. Data analysts in the analytical plane require specialised tools, different from those used by the software developers in the operational plane. This general “move data from left to right” structure is commonly known as a multi-hop data architecture.

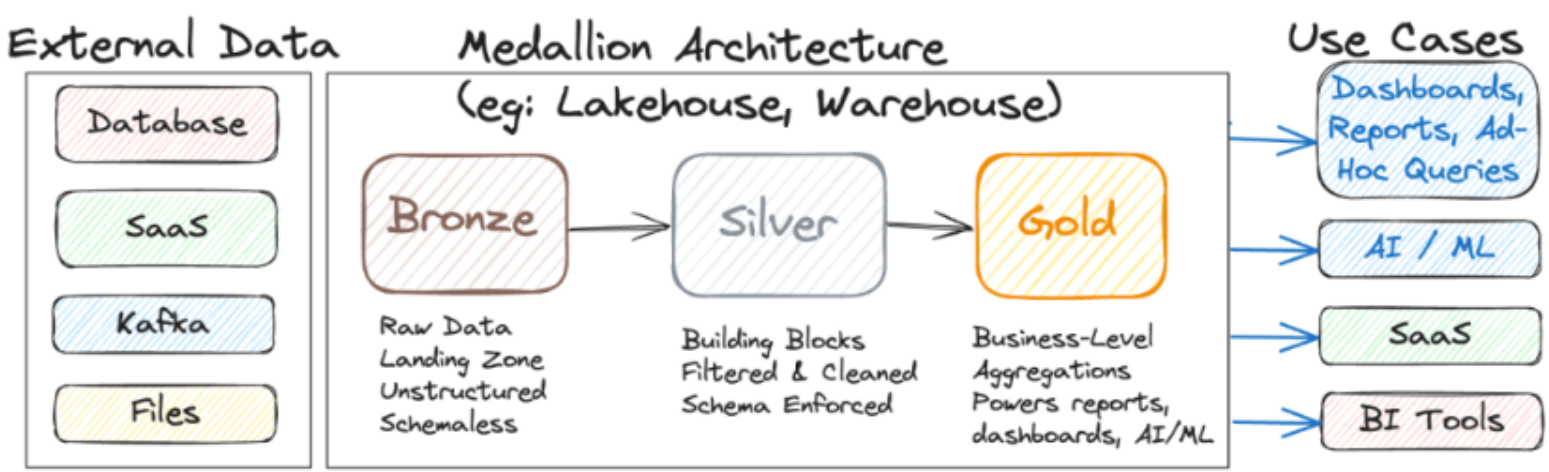

The medallion architecture is likely the most popular form of the multi-hop architecture. It has three levels of data quality, represented by the colors of Olympic medals: bronze, silver, and gold. The bronze layer acts as the landing zone (Stage 1), silver as the cleaned and well-defined data layer (Stage 2), and gold as the business-level aggregated data sets (Stage 3).

The data in Stage 1 is typically raw and unstructured data. It is then cleaned up, schematised, and standardised, then written into Stage 2. From here, it can be further aggregated, grouped, denormalised, and processed, to create business-specific data sets in Stage 3 that go on to power dashboards, reports, and provide training data for AI and machine learning models.

The problems with multi-hop architectures

First, multi-hop architectures are slow because they are most commonly implemented with periodically triggered batch processes. Data must get from source to bronze before the next hop can begin.

For example, if you pull data into your bronze layer every 15 minutes, each subsequent hop can run no more often than every 15 minutes, because data moves from stage to stage only as fast as the slowest hop. Even if you dial it down to 1 minute per hop, it’s still going to be at least 3 minutes before that data is available in the gold layer (not counting processing time).
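The arithmetic above can be written down as a short sketch. It follows the article's simplified accounting, in which each hop contributes one full trigger interval and processing time is ignored; the function name `scheduling_delay_minutes` is hypothetical.

```python
def scheduling_delay_minutes(hop_intervals):
    """Sum the trigger interval of every hop, ignoring processing time."""
    if any(interval <= 0 for interval in hop_intervals):
        raise ValueError("every hop interval must be positive")
    return sum(hop_intervals)


# Three hops: source -> bronze, bronze -> silver, silver -> gold.
print(scheduling_delay_minutes([1, 1, 1]))     # 3
print(scheduling_delay_minutes([15, 15, 15]))  # 45
```

Under the same accounting, a 15-minute schedule on all three hops means data can be about 45 minutes old by the time it reaches gold.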

Second, multi-hop architectures are expensive because each hop is yet another copy of data, which requires processing power to load it, process it, and write it to the next stage in the hop. This adds up quickly.

Third, multi-hop architectures tend to be brittle because different people tend to own the different stages of the workflow, the source database, and the final use cases. Very strong coordination is necessary to prevent breakages. And in practice, this tends to be difficult to scale.

Fourth, making data analysts responsible for getting their own data leads to a sprawl of similar-yet-different data pipelines. Each team may build its own custom pipelines to avoid distributed ownership issues, and the larger the company, the more common these near-duplicate pipelines and data sets become. It can become challenging to find all the available data sets.

This leads to our fifth problem: similar-yet-different data sets. Why are there multiples? Which one should I use? Is this data set still maintained, or is it a zombie data set that’s still regularly updated but without anyone overseeing it? The problem comes to a head when you have important computations that disagree with each other, due to reliance on data sets that should be identical but are not. Providing conflicting reports, dashboards, or metrics to customers will result in a loss of trust, and in a worst-case scenario, loss of business and even legal action.

Even if you sort out all of these problems—reducing latency, reducing costs, removing duplicate pipelines and data sets, and eliminating break-fix work—you still haven’t provided anything that operations can use. They’re still on their own, upstream of your ETLs, because all of the cleaning, structuring, remodeling, and distribution work is only really useful for those in the data analytics space.

Apache Iceberg key components:

- The first component is table storage and optimisation. Iceberg stores all the data for building tables, typically using readily available cloud storage like Amazon S3. Iceberg manages the storage and maintenance of the data, including optimisations like file compaction and versioning.

- The Iceberg catalog contains metadata, schemas, and table information, such as what tables you have and where they are. You declare your tables in your Iceberg catalog, such that you can plug in your processing and query engines to access the underlying data.

- Transactions. Iceberg supports transactions and concurrent reads and writes so that multiple heads can do heavy-duty work without affecting each other.

- Iceberg provides time travel capabilities. You can execute queries against a table at a specific point in time, which makes Iceberg very useful for auditing, bug fixing, and regression testing.

- Iceberg provides a central pluggable data layer. You can plug in your open source options like Flink, Trino, Presto, Hive, Spark, and DuckDB, or popular SaaS options like BigQuery, Redshift, Snowflake, and Databricks.

How you integrate with these services varies, but typically relies on replicating metadata from the Iceberg catalog, so your processing engine can figure out where the files are, and how to query them. Consult your processing engine’s documentation for Iceberg integration for more information.
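To make the time travel idea concrete, here is a toy model in plain Python. It is not the Iceberg API and does not reflect how Iceberg stores data; the class `ToySnapshotTable` and its methods are hypothetical. It only illustrates the principle: every commit produces a new immutable snapshot, and a query "as of" a timestamp reads the latest snapshot committed at or before that time.

```python
from bisect import bisect_right


class ToySnapshotTable:
    """Append-only table that keeps every committed snapshot (toy model)."""

    def __init__(self):
        self._timestamps = []  # commit times, strictly increasing
        self._snapshots = []   # full table contents after each commit

    def commit(self, ts, rows):
        if self._timestamps and ts <= self._timestamps[-1]:
            raise ValueError("commit timestamps must be strictly increasing")
        previous = self._snapshots[-1] if self._snapshots else ()
        self._timestamps.append(ts)
        self._snapshots.append(previous + tuple(rows))

    def as_of(self, ts):
        """Return the table contents as they were at time ts."""
        idx = bisect_right(self._timestamps, ts)
        return self._snapshots[idx - 1] if idx else ()


table = ToySnapshotTable()
table.commit(100, ["order-1"])
table.commit(200, ["order-2"])
print(table.as_of(150))  # ('order-1',)
print(table.as_of(200))  # ('order-1', 'order-2')
```

A real engine exposes this through its own syntax or client library, so consult its Iceberg documentation for the actual calls.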

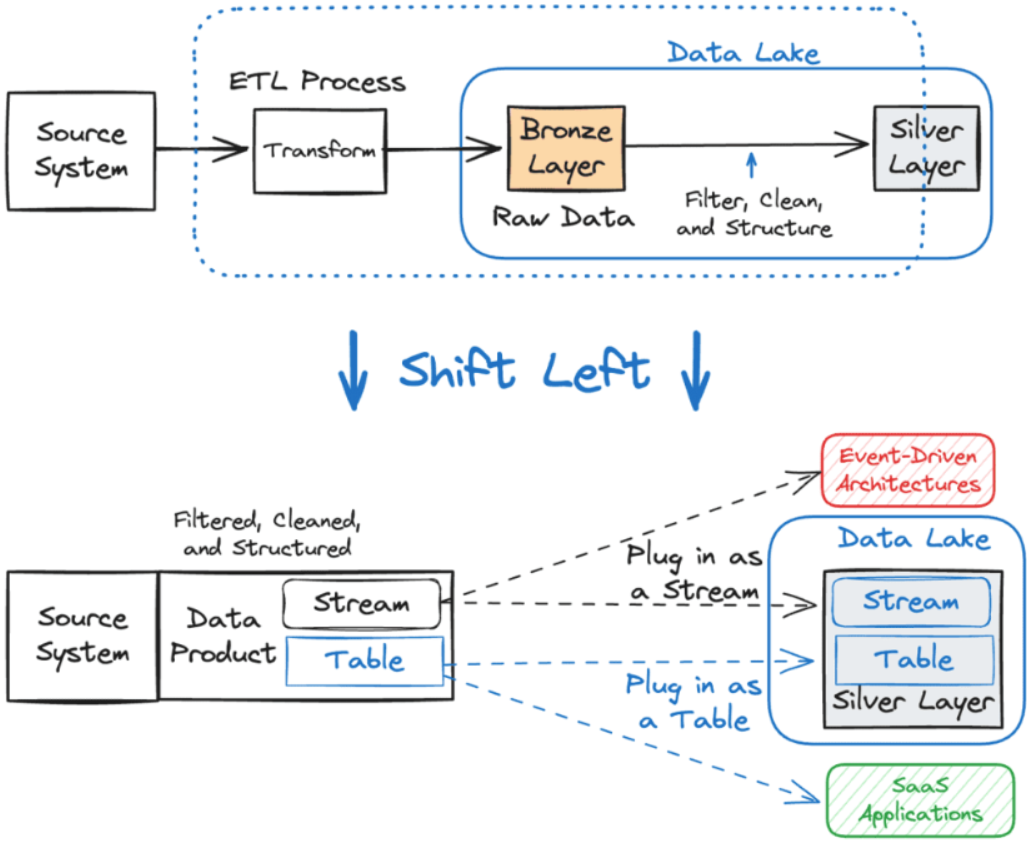

Shift left for a headless data architecture

Building a headless data architecture requires a rethink of how we circulate, share, and manage data in our organisations—a shift left. We extract the ETL->bronze->silver work from downstream and put it upstream inside our data products, much closer to the source.

A stream-first approach provides data products with sub-second data freshness, in contrast to the periodic, ETL-produced data sets that are at best minutes old and outdated. By shifting left, you can make data access cheaper, easier, and faster to use all across your company.

Building the headless data architecture with data products

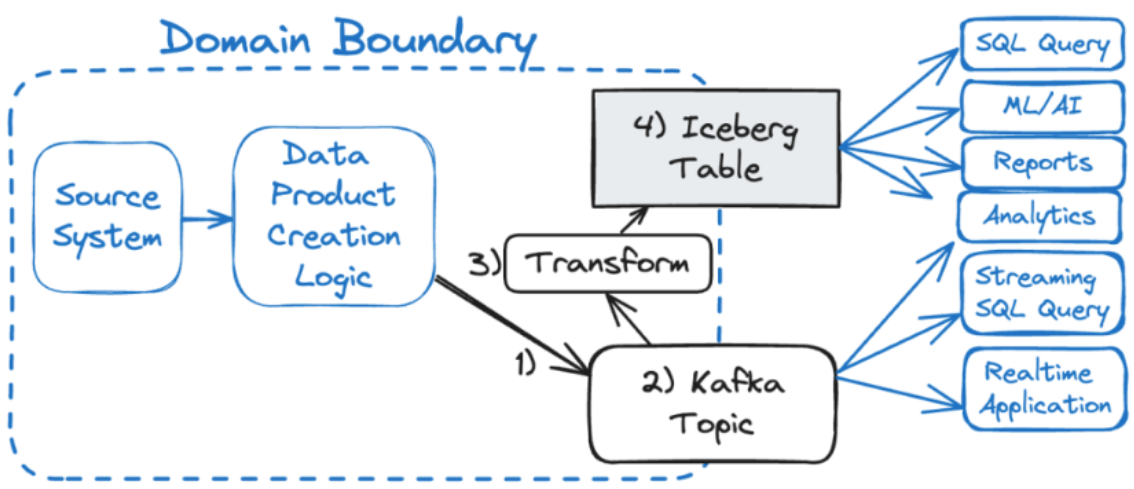

The logical top level of data in a headless data architecture is the data product, which you may already be familiar with from the data mesh approach. In the headless data architecture, a data product is composed of a stream (powered by Apache Kafka®) and its related table (powered by Apache Iceberg™). The data that is written to the stream is automatically appended to the table as well so that you can access the data either as a Kafka topic or as an Iceberg table.

The figure above shows a stream/table data product created from a source system. First you write data to the stream. Then you can optionally transform data from the stream, ultimately materialising it into an Iceberg table.

You can use the stream (the Kafka topic) to power low-latency business operations such as order management, vehicle dispatch, and financial transactions. Meanwhile, you can also plug in batch query heads into the Iceberg table to compute higher-latency workloads, like daily reporting, customer analytics, and periodic AI training.

A data product is a trustworthy data set that’s purpose-built to share and reuse with other teams and services. It’s a formalisation of responsibilities, technology, and processes to simplify getting the data you and your services need. You may also hear data products referred to as reusable data assets, though the essence remains the same—shareable, reusable, standardised, and trustworthy data.

The data product creation logic depends heavily on the source system. For example:

- An event-driven application writes its output directly to a Kafka topic, which can be easily materialised into an Iceberg table. The data product creation logic may be quite minimal, for example, masking confidential fields or dropping them completely.

- A conventional request/response application uses change data capture (CDC) to extract data from the underlying database, convert it to events, and write it to the Kafka topic. The CDC events contain a well-defined schema based on the source table, and you can perform further transformations of the data using either the connector itself or something more powerful like FlinkSQL.

- A SaaS application may require periodic polling of an endpoint using Kafka Connect to write to the stream.
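As an illustration of the "quite minimal" creation logic mentioned for the event-driven case, the sketch below masks or drops confidential fields before an event is published. The function name, the placeholder value, and the field names are all hypothetical; a real data product would apply whatever masking policy your organisation requires.

```python
REDACTED = "***"  # assumed placeholder; choose one that suits your policy


def build_data_product_event(raw_event, drop_fields=(), mask_fields=()):
    """Return a copy of raw_event that is safe to publish to the stream."""
    drop, mask = set(drop_fields), set(mask_fields)
    published = {}
    for name, value in raw_event.items():
        if name in drop:
            continue  # confidential field removed entirely
        published[name] = REDACTED if name in mask else value
    return published


raw = {"order_id": 42, "email": "a@example.com", "card_number": "4111", "total": 9.5}
event = build_data_product_event(raw, drop_fields=["card_number"], mask_fields=["email"])
print(event)  # {'order_id': 42, 'email': '***', 'total': 9.5}
```

The input dictionary is left untouched, so the source application can keep using the full record internally.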

The elegance of a stream-first data product is that your only requirement is to write it to the stream. You do not have to manage a distributed transaction to write to both the stream and table simultaneously (which is pretty hard to do properly and can also be relatively slow). Instead, you create an append-only Iceberg table from the stream via Kafka Connect or a proprietary SaaS stream-to-table solution like Confluent’s Tableflow. Fault tolerance and exactly-once writes can help keep your data integrity in check, so that you get the same results regardless of whether you read from the stream or the table.
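The toy sketch below shows why an append-only table derived from the stream can stay consistent even when events are redelivered after a failure. It is a simplified model, not how Kafka Connect or Tableflow is implemented: the class `ToyTableSink` is hypothetical, and it assumes the sink records the next expected stream offset together with the rows it has written.

```python
class ToyTableSink:
    """Appends stream events to a table, skipping offsets already written."""

    def __init__(self):
        self.rows = []
        self.next_offset = 0  # assumed to be committed atomically with rows

    def append_batch(self, batch):
        """batch is an iterable of (offset, event) pairs in offset order."""
        for offset, event in batch:
            if offset < self.next_offset:
                continue  # redelivered event, already in the table
            if offset != self.next_offset:
                raise ValueError(f"gap in stream at offset {offset}")
            self.rows.append(event)
            self.next_offset = offset + 1


stream = ["created", "paid", "shipped"]
sink = ToyTableSink()
sink.append_batch(enumerate(stream[:2]))  # first delivery: offsets 0 and 1
sink.append_batch(enumerate(stream))      # retry redelivers 0 and 1, adds 2
print(sink.rows == stream)  # True
```

Because duplicates are filtered by offset, reading the table yields the same events as reading the stream from the beginning.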

Selecting data sets for shifting left

Shift left is not all or nothing. In fact, it’s incredibly modular and incremental. You can selectively choose which loads to shift left, and which to leave as is. You can set up a parallel shift-left solution, validate it, and then swap your existing jobs over to it once satisfied. The process looks something like this:

- Select a commonly used data set in your analytics plane. The more commonly used the data set is, the better a candidate it is for shifting left. Business-critical data that has little room for error (such as billing information) is also a good candidate for shifting left.

- Identify the source of the data in the operational plane. This is the system that you’re going to need to work with to create a stream of data. Note that if this system is already event-driven, you may already have a stream available and can skip to the fourth step below.

- Create a source-to-stream workflow in parallel to the existing ETL pipeline. You may need to use a Kafka connector (e.g., CDC) to convert database data to a stream of events. Alternatively, you can choose to produce the events directly to the stream; just ensure that you write the complete data set so it remains consistent with the source database.

- Create a table from the stream. You can use Kafka Connect to generate the Iceberg table, or you can rely on automated third-party proprietary services to provide you with an Iceberg table. Full disclosure: Using Kafka Connect results in a copy of the data written as an Iceberg table. In the near future, expect to see third-party services offer the ability to scan a Kafka topic as an Iceberg table without making a second copy of the data.

- Plug the table into your existing data lake, alongside the data in the silver layer. Now you can validate that the new Iceberg table is consistent with the data in your existing data set. Once you are satisfied, you can migrate your data analytic jobs off the old batch-created table, deprecate it, and then remove it at your convenience.
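The validation in the final step can be as simple as a keyed comparison between the existing batch-created data set and the new stream-derived table. The sketch below assumes both have been loaded as lists of dictionaries sharing a unique key column; the function name `compare_tables` and the sample columns are hypothetical.

```python
def compare_tables(old_rows, new_rows, key):
    """Report rows that differ between the old and new tables, by key."""
    old = {row[key]: row for row in old_rows}
    new = {row[key]: row for row in new_rows}
    return {
        "missing_in_new": sorted(old.keys() - new.keys()),
        "unexpected_in_new": sorted(new.keys() - old.keys()),
        "mismatched": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


old_table = [{"id": 1, "total": 10}, {"id": 2, "total": 20}, {"id": 3, "total": 30}]
new_table = [{"id": 1, "total": 10}, {"id": 2, "total": 25}, {"id": 4, "total": 40}]
print(compare_tables(old_table, new_table, key="id"))
# {'missing_in_new': [3], 'unexpected_in_new': [4], 'mismatched': [2]}
```

Keep in mind that the stream-derived table is usually fresher than the batch one, so compare over a window that both have fully ingested.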

Additional headless data architecture considerations

As discussed in the previous article, you can plug your Iceberg table into any compatible analytical endpoint without copying the data over. For data streams, it’s the same story. In both cases, you simply select the processing head and plug it into the table or stream as needed.

Shifting left also unlocks some powerful capabilities absent from your typical copy-and-paste, multi-hop, medallion architecture. You can manage stream and table evolution together from a single logical point, validating that stream evolutions won’t break your Iceberg table.

Because the work has been shifted left out of the data analytics space, you can integrate data validations and tests into the source application deployment pipelines. This can help you catch breakages before code goes into production, instead of detecting them long after the fact downstream.
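One way to picture such a pipeline check is a function that compares the current stream schema with a proposed one and lists changes that would break the table. The rules below are an assumed, deliberately strict policy for illustration only; they are not the compatibility rules of Iceberg or of any schema registry. Schemas are modelled as dictionaries mapping a field name to a `(type, required)` pair, and all names are hypothetical.

```python
def breaking_changes(old_schema, new_schema):
    """List changes in new_schema that this toy policy treats as breaking."""
    problems = []
    for name, (old_type, _required) in old_schema.items():
        if name not in new_schema:
            problems.append(f"field removed: {name}")
        elif new_schema[name][0] != old_type:
            problems.append(f"type changed: {name} {old_type} -> {new_schema[name][0]}")
    for name, (_type, required) in new_schema.items():
        if name not in old_schema and required:
            problems.append(f"new required field: {name}")
    return problems


current = {"order_id": ("long", True), "total": ("double", True)}
proposed = {
    "order_id": ("string", True),  # type change
    "total": ("double", True),
    "coupon": ("string", False),   # optional addition, allowed
    "region": ("string", True),    # required addition, rejected
}
for problem in breaking_changes(current, proposed):
    print(problem)
# type changed: order_id long -> string
# new required field: region
```

A deployment pipeline could fail the build whenever the returned list is non-empty, which surfaces the problem before the change reaches production.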

Finally, since your table is derived from the stream, you only have to fix bad data in one place: whatever you write to the stream will propagate to the table. Streaming applications will automatically receive the corrected data and can self-correct. Periodic batch jobs that use the table will still need to be identified and rerun, but this is identical to what you would need to do in a conventional multi-hop architecture anyway.

A headless data architecture unlocks unparalleled data access across your entire organisation. But it starts with a shift left.

If you would like to discover how Sida4 can utilise Confluent to implement headless data architecture for seamless data integration, then let’s talk.

Publishing note: This article was originally published under the 4impact brand and is now represented by Sida4, their data enablement and integration focused sister company.
